While traditional Machine Learning (ML) techniques learn from examples (e.g. cat vs. dog), Reinforcement Learning (RL) learns from interaction with its environment. ML identifies patterns within a dataset, for example which characteristics of a photo make it a dog or a cat. Reinforcement learning, on the other hand, learns to perform a task: it makes decisions so as to maximize a reward given by a user, not unlike dog training, where you give the dog a cookie when it does well. It thus learns what impact its actions have on its environment. We call this a decision process.

In this context, we distinguish several important concepts:

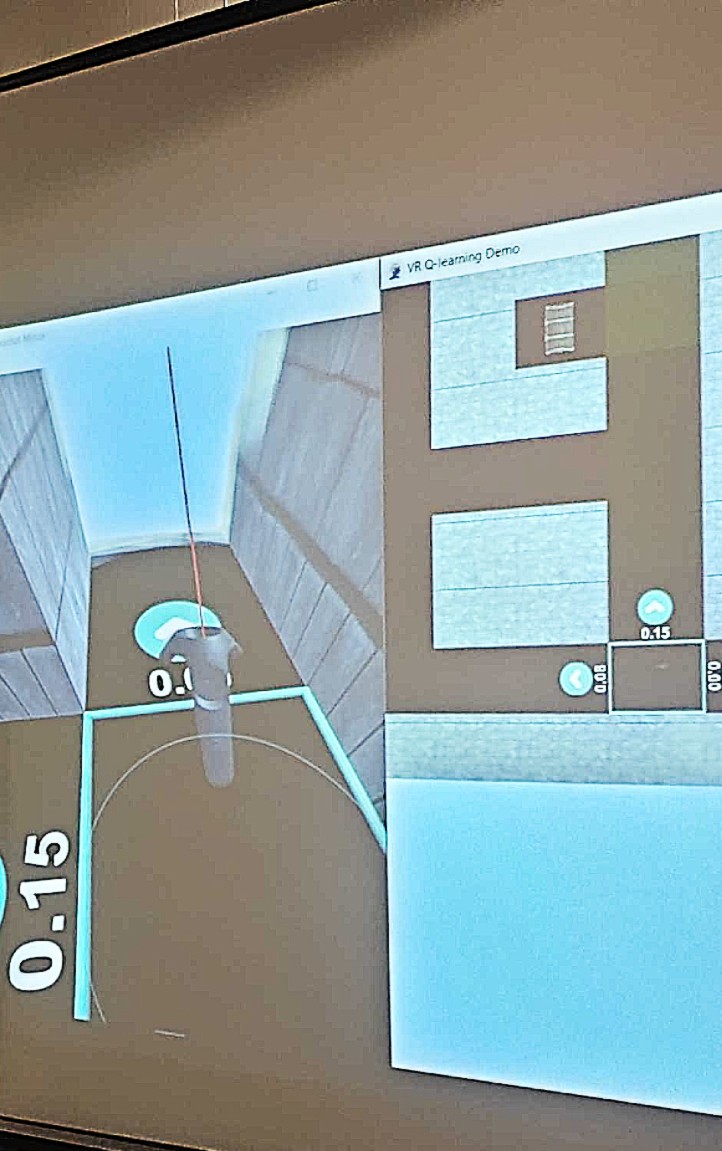

The agent attempts to maximize its rewards by taking actions, in an “intelligent” way, that change its environment. An example environment could be a game of chess: each action is a potential move by the player, and the board position is the state of the environment. Ultimately, a single reward is given at the end of the game: did you win or not?

The agent thus observes the current state of the environment (e.g. through sensor readings or camera images) and executes an action, such as moving or pressing a button. After every action, the agent receives feedback in the form of a reward (a real number) and then observes the next state.
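The observe, act, reward cycle described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions: `CorridorEnv`, its `observe` and `step` methods, and the reward values are hypothetical names and numbers invented for this example, not part of any particular RL library.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: a corridor of cells with the goal at the right end."""

    def __init__(self, length=5):
        self.length = length
        self.position = 0  # the state is simply the agent's cell index

    def observe(self):
        # The agent "senses" the current state of the environment.
        return self.position

    def step(self, action):
        # action is -1 (move left) or +1 (move right); walls clamp the position.
        self.position = min(max(self.position + action, 0), self.length - 1)
        # Assumed reward scheme: 1.0 on reaching the goal cell, 0.0 otherwise.
        reward = 1.0 if self.position == self.length - 1 else 0.0
        return self.position, reward


env = CorridorEnv()
state = env.observe()                   # 1. observe the current state
action = random.choice([-1, +1])        # 2. choose an action (here: at random)
next_state, reward = env.step(action)   # 3. receive a reward and the next state
print(f"state={state} action={action:+d} reward={reward} next_state={next_state}")
```

A learning agent would replace the random choice with a decision rule that it improves over time based on the rewards it receives.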

Once a series of actions has been executed or the agent reaches a predefined goal, the “episode” finishes. The objective of the agent is to learn which action to execute in which state so that it obtains the maximum cumulative reward, in other words the highest possible total reward from the first observed state until the end of the episode. The agent is then reset, put back in a random environmental state, and starts executing actions again.
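To make the notions of episode, cumulative reward, and reset concrete, here is a minimal sketch. It assumes a hypothetical corridor environment (`CorridorEnv`), an invented reward scheme (a small penalty per step, a bonus at the goal), and two fixed hand-written policies; no learning takes place, the code only shows how the total reward of an episode is accumulated.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: reach the rightmost cell of a corridor."""

    def __init__(self, length=5, seed=None):
        self.length = length
        self.rng = random.Random(seed)
        self.position = 0

    def reset(self):
        # Put the agent back in a random non-goal cell.
        self.position = self.rng.randrange(self.length - 1)
        return self.position

    def step(self, action):
        # action is -1 (left) or +1 (right); walls clamp the position.
        self.position = min(max(self.position + action, 0), self.length - 1)
        done = self.position == self.length - 1
        # Assumed rewards: +1.0 at the goal, -0.1 for every other step.
        reward = 1.0 if done else -0.1
        return self.position, reward, done


def run_episode(env, policy, max_steps=50):
    """Run one episode and return its cumulative reward."""
    state = env.reset()
    total_reward = 0.0
    for _ in range(max_steps):  # the episode ends after a fixed number of actions...
        action = policy(state)
        state, reward, done = env.step(action)
        total_reward += reward
        if done:                # ...or as soon as the goal is reached
            break
    return total_reward


def always_right(state):
    return +1


def always_left(state):
    return -1


env = CorridorEnv(seed=0)
for name, policy in [("always_right", always_right), ("always_left", always_left)]:
    print(name, round(run_episode(env, policy), 2))
```

Under these assumptions, the policy that walks toward the goal collects a higher cumulative reward than the one that walks away from it, which is exactly the signal an RL agent uses to prefer one behavior over another.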