We investigate the possibilities of Multi-objective Decision Making (MODeM), and particularly Multi-objective Reinforcement Learning (MORL), for decision support. Specifically, we are interested in providing guarantees with respect to user utility.

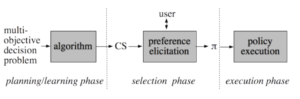

For example, imagine you aim to optimise the traffic light control of a large European city with a population of about 1 million people. In vital areas, the traffic load will be high, and every change in the traffic light control policy may have a large impact on, among other things, the waiting time of people in transit (one objective) and pollution (another objective). Because it is impossible to minimise both simultaneously, a political decision has to be made on how to weigh the alternative policies and their impacts on waiting time and pollution. To make an informed decision, we first need to produce a set of possibly optimal policies, which we refer to as a coverage set (CS). The decision makers then select the policy they like best from the CS. A schematic overview of this process is provided in the figure above.

First, the multi-objective decision problem is analysed by a planning or learning algorithm. In this case, we simulate different traffic light control policies, in order to estimate their values accurately, leading to the set of possibly optimal alternatives. Second, in the selection phase, we interact with human decision makers to elicit their preferences with respect to the values of policies in the different objectives (waiting time and pollution levels). Finally, when we are sure which policy the human decision makers prefer, this policy is executed.
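As a minimal sketch of the first two phases, the snippet below filters a handful of estimated policy values down to a Pareto coverage set and then picks one policy under a linear utility. The policy names, the numbers, and the linear form of the utility are illustrative assumptions, not results or methods from this project; a Pareto filter is only one possible notion of coverage set.

```python
from typing import Dict, Tuple

# (waiting time, pollution); both are costs to be minimised.
Value = Tuple[float, float]


def dominates(a: Value, b: Value) -> bool:
    """True if a is no worse than b in every objective and strictly better in one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def pareto_coverage_set(values: Dict[str, Value]) -> Dict[str, Value]:
    """Keep only the policies whose value vector is not dominated by another policy."""
    return {
        name: v
        for name, v in values.items()
        if not any(dominates(w, v) for other, w in values.items() if other != name)
    }


def select(cs: Dict[str, Value], weights: Tuple[float, float]) -> str:
    """Pick the policy with the lowest weighted cost (assumed linear utility)."""
    return min(cs, key=lambda name: sum(w * x for w, x in zip(weights, cs[name])))


# Hypothetical value estimates, e.g. obtained from traffic simulation.
estimates = {
    "fixed_cycle": (60.0, 50.0),
    "green_wave": (40.0, 55.0),
    "low_emission": (70.0, 30.0),
    "naive": (75.0, 60.0),  # worse than fixed_cycle in both objectives
}

cs = pareto_coverage_set(estimates)
chosen = select(cs, (0.5, 0.5))
```

In a real selection phase the weights would not be given up front; they stand in here for preferences that would have to be elicited from the decision makers.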

In this project, we aim to provide guarantees with respect to the executed policy. That is, given limits on computation time and on the amount of interaction we can have with human decision makers, can we make sure that the executed policy, π, is close, in terms of user utility, to the optimal policy we would obtain with an infinite amount of computation time and infinitely many interactions with the human decision makers? We investigate different MORL and multi-objective planning techniques for the planning/learning phase, as well as different selection methods for the selection phase, in order to attain these guarantees. Furthermore, we explore the possibilities for intertwining the planning/learning and selection phases, which we expect to be particularly useful when little computation time is available.
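One way to read such a guarantee is as a bound on utility loss: the gap between the utility of the best available policy and that of the executed one should stay below some tolerance. The sketch below computes this gap for hypothetical value vectors under an assumed linear utility. In practice neither the true optimal values nor the user's utility function are known exactly, which is what makes the guarantee hard to give; the function name, the numbers, and the tolerance `epsilon` are assumptions for illustration only.

```python
from typing import Callable, Iterable, Tuple

Value = Tuple[float, float]  # (waiting time, pollution), both costs


def utility_loss(
    executed: Value,
    candidates: Iterable[Value],
    utility: Callable[[Value], float],
) -> float:
    """Utility of the best candidate minus utility of the executed policy."""
    best = max(utility(v) for v in candidates)
    return best - utility(executed)


def linear_utility(v: Value) -> float:
    """Assumed user utility: negative weighted cost with equal weights."""
    return -(0.5 * v[0] + 0.5 * v[1])


# Hypothetical values of the policies in a coverage set.
candidates = [(60.0, 50.0), (40.0, 55.0), (70.0, 30.0)]

loss = utility_loss((70.0, 30.0), candidates, linear_utility)
epsilon = 5.0  # hypothetical tolerance
within_guarantee = loss <= epsilon
```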

Selection of relevant publications from this project:

Research topics: