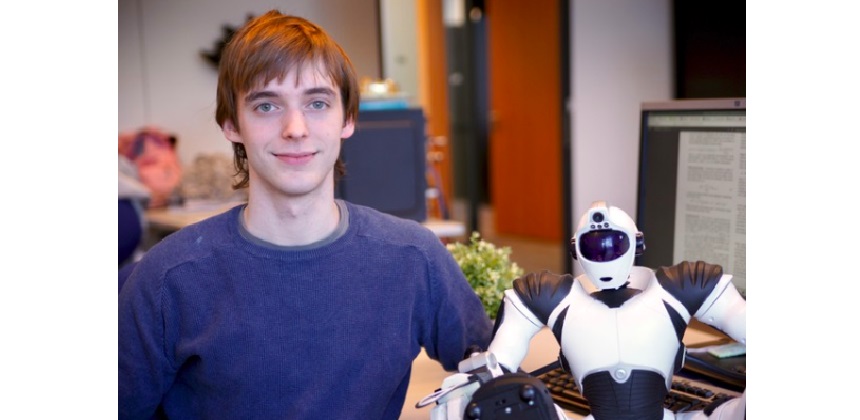

During last week’s research meeting, PhD student Denis Steckelmacher presented his research on Bootstrapped Dual Policy Iteration (BDPI). He explained his goals and the role that sample-efficient reinforcement learning plays in reaching them.

In reinforcement learning, we not only want the agent to learn how to perform well in a given environment, we also want it to learn quickly, that is, using as few trials (and errors) as possible. For instance, a robotic wheelchair that may collide with a wall or person while learning must learn as quickly as possible.

Denis presented a new reinforcement learning algorithm that is extremely sample-efficient. He also explained how reinforcement learning algorithms can be divided into three families: critic-only, actor-only and actor-critic.

With discrete actions, that is, when the actions available to the agent can be numbered between 0 and N, critic-only algorithms outperform the others. Actor-only algorithms are extremely sample-inefficient, while actor-critic algorithms try to increase the sample-efficiency of the actor but currently fail to do so. BDPI is a new actor-critic algorithm that differs fundamentally from the other actor-critic algorithms.
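To make the terminology concrete, here is a minimal sketch of how a critic and an actor each choose among discrete actions. It is only an illustration under simple assumptions (a single state, hand-written numbers), and the function names `critic_action` and `actor_action` are hypothetical rather than taken from BDPI.

```python
import random


def critic_action(q_values):
    """Critic-style choice: the agent stores one value estimate per action
    and, in this sketch, simply picks the action with the highest estimate."""
    return max(range(len(q_values)), key=lambda a: q_values[a])


def actor_action(probabilities, rng):
    """Actor-style choice: the agent stores an explicit probability for each
    action and samples an action from that distribution."""
    actions = range(len(probabilities))
    return rng.choices(actions, weights=probabilities, k=1)[0]


# Hypothetical numbers for one state with three actions (0, 1, 2).
q_values = [0.1, 0.7, 0.2]   # a critic's value estimates
policy = [0.2, 0.5, 0.3]     # an actor's action probabilities

greedy = critic_action(q_values)
sampled = actor_action(policy, random.Random(0))
```

An actor-critic algorithm keeps both objects and uses the critic's value estimates to improve the actor's probabilities.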

Thanks to its use of “off-policy” critics, rather than the “on-policy” critics that actor-critic algorithms typically use, the actor can learn very fast. Moreover, our experiments show that removing the actor of BDPI, thus making it critic-only, lowers its sample-efficiency. BDPI is, therefore, the first actor-critic algorithm that outperforms critic-only algorithms, and whose actor provides a net benefit.
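The difference between off-policy and on-policy critics can be sketched with the textbook tabular learning targets. The example below contrasts a Q-Learning-style (off-policy) target with a SARSA-style (on-policy) one. It illustrates the general distinction only and is not BDPI's actual critic update; the function names and the numbers are assumptions made for this example.

```python
def off_policy_target(reward, next_q_values, gamma):
    """Q-Learning-style target: bootstrap from the best next action,
    whichever action the agent actually goes on to take."""
    return reward + gamma * max(next_q_values)


def on_policy_target(reward, next_q_values, next_action, gamma):
    """SARSA-style target: bootstrap from the action the current policy
    actually takes in the next state."""
    return reward + gamma * next_q_values[next_action]


def td_update(q, target, alpha):
    """Move the current value estimate a fraction alpha towards the target."""
    return q + alpha * (target - q)


# Hypothetical transition: reward 1.0, two actions available in the next state.
next_q_values = [0.0, 2.0]
off = off_policy_target(1.0, next_q_values, gamma=0.5)
on = on_policy_target(1.0, next_q_values, next_action=0, gamma=0.5)
updated = td_update(0.0, off, alpha=0.5)
```

Because the off-policy target does not depend on the action the agent actually takes next, such a critic can learn from experience collected by any policy, including old experience, which is one common reason off-policy methods tend to need fewer new samples.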